Algorithms shape modern life, influencing decisions and perceptions. Safiya Noble’s work reveals how search engines embed biases, perpetuating systemic oppression and racial stereotypes in digital spaces.

A Society, Searching

Society relies heavily on search engines to navigate information, often unaware of the biases embedded in their algorithms. These tools shape perceptions, including how marginalized communities are viewed. A striking example is the search for “black girls,” which often yields demeaning results, reflecting systemic racism. Safiya Noble critiques how such outcomes perpetuate harmful stereotypes, highlighting the need for ethical considerations in algorithm design. Reliance on biased systems makes transparency and accountability in technology development urgent. By examining these issues, we can better understand how algorithms reinforce oppression and work toward more equitable digital spaces.

Searching for Black Girls

The search for “black girls” often leads to problematic results, dominated by pornographic content and stereotypes. This highlights how algorithms perpetuate racism and sexism, marginalizing Black women and girls. Safiya Noble emphasizes that these outcomes are not accidental but are shaped by historical and systemic biases embedded in technology. Such results dehumanize Black girls, reducing them to harmful tropes and reinforcing societal oppression. This digital erasure and misrepresentation have real-world consequences, contributing to the invisibility and exclusion of Black women in broader cultural narratives. Noble argues that addressing these issues requires a critical examination of how algorithms are designed and the societal structures they reflect. By challenging these systems, we can work toward more inclusive and equitable digital spaces that celebrate diversity rather than suppress it.

Searching for People and Communities

Searching for marginalized communities often reveals skewed representations shaped by algorithmic biases. Because search engines prioritize profit over ethical considerations, oppressive content often dominates. Marginalized groups, such as Black women, are frequently excluded from mainstream narratives or reduced to stereotypes. Safiya Noble highlights how these exclusions reflect broader societal inequities, perpetuating systemic oppression. The algorithms governing search engines categorize and rank information in ways that reinforce racial and gendered hierarchies. This digital marginalization limits the visibility of diverse voices and fosters a narrow, biased public discourse. By examining these patterns, Noble calls for a critical reevaluation of how search technologies shape our understanding of identity and community. Addressing these issues is essential for creating more equitable and inclusive digital spaces that reflect the full richness of human experience.

The Role of Search Engines in Reinforcing Racism

Search engines like Google perpetuate systemic racism by marginalizing communities of color, reinforcing harmful stereotypes through biased algorithms and prioritizing oppressive content over diverse perspectives and equitable representation.

Searching for Protections from Search Engines

Protecting individuals from biased search engine outcomes requires action on three fronts. First, ethical algorithms must be developed to ensure equitable representation of marginalized groups. This involves integrating diverse perspectives into the design process to mitigate racial and gender biases. Second, users need to be educated about how search engines operate, enabling them to critically assess the information they encounter. Third, policymakers must enforce regulations that hold tech companies accountable for promoting non-discriminatory content. By addressing these issues, society can move toward a more inclusive digital environment where search engines serve as tools for empowerment rather than oppression.

The Future of Knowledge in the Public

The future of knowledge in the public sphere hinges on addressing algorithmic biases that currently distort information. Safiya Noble emphasizes the need for ethical algorithms that prioritize equity and inclusivity. By reimagining how search engines operate, we can create systems that amplify marginalized voices rather than suppress them. This requires collaboration between tech developers, policymakers, and communities to ensure diverse perspectives are integrated into algorithmic design. Public awareness campaigns can also empower users to critically engage with digital content. Ultimately, the goal is to foster a digital landscape where knowledge is accessible, accurate, and free from systemic oppression, enabling a more informed and equitable society for all.

Algorithms and Their Impact on Marginalized Groups

Algorithms perpetuate stereotypes, disproportionately affecting marginalized groups like Black women, who face systemic racism and sexism embedded in digital spaces, as highlighted in Algorithms of Oppression.

The Future of Information Culture

The future of information culture hinges on addressing algorithmic bias. Safiya Noble emphasizes the need for ethical frameworks to ensure equitable representation and challenge oppressive digital systems. By fostering diverse perspectives in tech development, we can create more inclusive algorithms that amplify marginalized voices. This requires a collective effort to dismantle systemic biases embedded in search engines and other platforms. Education and advocacy are critical in raising awareness about algorithmic oppression. Ultimately, a reimagined information culture must prioritize transparency, accountability, and justice to empower all communities equitably in the digital age.

Algorithmic Oppression and Its Consequences

Algorithmic oppression perpetuates systemic biases, reinforcing racial and gender stereotypes through search engine results. Safiya Noble highlights how marginalized groups, particularly Black women, face dehumanizing portrayals in online spaces. For instance, searches for “Black girls” often yield pornographic or demeaning content, reflecting broader societal biases embedded in algorithms. This perpetuates harmful stereotypes, erasing the complexity and humanity of Black women. Such biases have real-world consequences, including job discrimination, mental health impacts, and exclusion from opportunities. Algorithmic oppression also marginalizes communities by prioritizing dominant narratives, further entrenching inequality. Addressing these issues requires acknowledging the role of algorithms in perpetuating oppression and advocating for equitable digital systems that amplify diverse voices and challenge systemic racism.

Ethical Considerations in Algorithm Development

Ethical algorithm development requires frameworks that mitigate bias and bring diverse perspectives into the design process. Marginalized communities must be centered to address systemic oppression and create fair, equitable digital environments.

The Need for Ethical Algorithms

The development of ethical algorithms is critical to address systemic biases embedded in search engines and digital platforms. Safiya Noble’s work highlights how algorithms perpetuate racial and gender stereotypes, emphasizing the urgent need for ethical frameworks to guide their creation. These frameworks must prioritize transparency, accountability, and inclusivity, ensuring that marginalized voices are represented in the design process. By integrating diverse perspectives, developers can create algorithms that promote equity and challenge oppressive structures. Ethical algorithms not only mitigate harm but also foster a more just digital environment, where information reflects the complexities of human experiences rather than reinforcing harmful biases.

The Role of Diversity in Tech

Diversity in tech is essential to combat algorithmic oppression, as homogeneous teams often perpetuate biases. Safiya Noble’s research underscores how the lack of diverse perspectives in algorithm development leads to systems that marginalize communities. By incorporating diverse voices, tech companies can identify and challenge stereotypes embedded in their technologies. Diversity ensures that algorithms reflect the complexity of human experiences, reducing harm to marginalized groups. However, diversity alone is not sufficient; it must be paired with inclusive practices that value and amplify underrepresented perspectives. The tech industry must prioritize equity and representation to create ethical and equitable digital spaces. This shift requires systemic change, fostering environments where diverse teams can thrive and contribute meaningfully to algorithmic design.

Case Studies and Real-World Examples

Safiya Noble’s work highlights real-world examples of algorithmic bias, such as search results for “Black girls” prioritizing negative stereotypes, illustrating systemic oppression embedded in tech.

The Case of Black Women and Search Engines

Safiya Noble’s research reveals how search engines perpetuate systemic racism and sexism, particularly against Black women. When searching for terms like “Black girls,” results often prioritize pornography or stereotypical portrayals, reflecting broader societal biases. These algorithms reinforce harmful stereotypes, marginalizing Black women and reducing their representation to simplistic or demeaning narratives. Noble argues that such outcomes are not accidental but are instead rooted in the design of algorithms that prioritize profit over equity. This case highlights how technology can amplify oppression, making it essential to critically examine and challenge the structures behind these systems. By exposing these biases, Noble calls for a more equitable approach to technology development, ensuring that marginalized voices are centered in the design process.

The Representation of Marginalized Groups Online

Safiya Noble’s work emphasizes how marginalized groups face systemic misrepresentation online due to biased algorithms. Search engines often prioritize content that reflects dominant stereotypes, pushing harmful narratives about race, gender, and sexuality. This digital marginalization limits the visibility of diverse voices and perpetuates exclusion. Noble argues that these systems reinforce social hierarchies, making it difficult for marginalized groups to challenge oppressive norms. The lack of diverse perspectives in algorithm development exacerbates this issue, creating a cycle of oppression. Addressing this requires intentional efforts to diversify tech teams and implement ethical guidelines that prioritize equity. Without such changes, marginalized groups will continue to face digital erasure and misrepresentation, further entrenching societal inequalities.

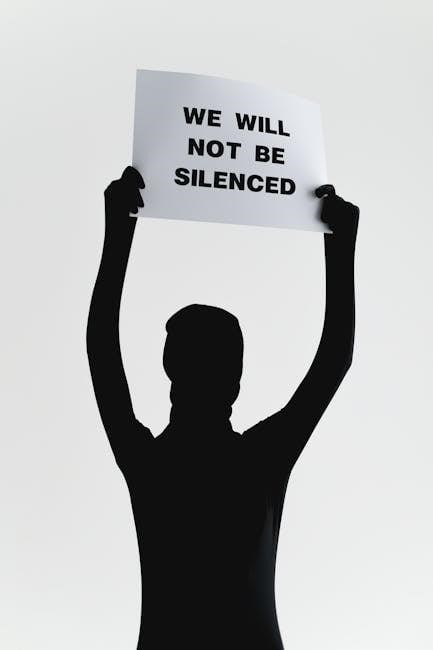

Noble’s work underscores the urgent need for ethical algorithmic reforms to combat systemic racism and sexism. Marginalized communities demand equitable digital representation and justice in technology development.

The Conclusion: Algorithms of Oppression

In her groundbreaking analysis, Safiya Noble reveals how search algorithms perpetuate racial and gender biases, marginalizing Black women and other minority groups. She argues that these technologies, far from being neutral, reflect and amplify societal oppressions. Noble emphasizes the urgent need for ethical reforms in tech development to address systemic discrimination. By examining how search engines like Google prioritize profit over equity, she exposes the deep-rooted issues in algorithmic design. The book calls for diverse representation in tech and the creation of inclusive digital spaces. Noble’s work challenges readers to rethink the role of technology in perpetuating inequality and advocates for a future where algorithms serve marginalized communities rather than oppress them.

The Epilogue and Final Thoughts

In the epilogue, Safiya Noble reflects on the broader implications of algorithmic oppression, urging society to confront the systemic biases embedded in technology. She emphasizes the need for accountability and transparency in tech development, advocating for inclusive practices that prioritize marginalized voices. Noble calls for a reimagining of digital spaces where equity and justice are central to design. She underscores the importance of diverse representation in tech to challenge the dominant narratives perpetuated by current algorithms. Ultimately, Noble offers a hopeful vision: a future where technology serves to empower, rather than oppress, and where marginalized communities are seen and valued. Her work concludes with a powerful reminder of the ethical responsibility to create a more just digital world.